Edge Computing for RIS

-

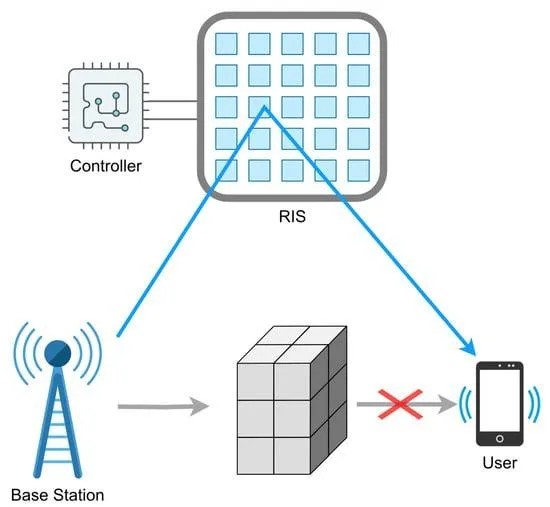

Reconfigurable Intelligent Surfaces

The resolution a RIS device achieves in the target angle is closely related to its number of elements, and it also depends on the number of phase shifts each cell can perform. The simplest cell is a binary cell, which allows two phase shifts, 0° and 180°, coded in a single bit. In general, more phase-shift levels give better resolution, at the cost of higher complexity in the computational problem of Reconfigurable Intelligent Surfaces (RIS) configuration.
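The trade-off between phase-shift levels and configuration complexity can be illustrated with a minimal sketch. It assumes, purely for illustration, that a cell coded with `bits` bits offers `2**bits` evenly spaced phase shifts (which reproduces the 0° and 180° of the binary cell), and that every cell is configured independently. The function names are hypothetical and do not come from the article.

```python
def phase_levels(bits):
    """Phase shifts (degrees) available to one cell.

    Assumption: 2**bits evenly spaced levels over 360 degrees.
    """
    n = 2 ** bits
    return [360.0 * k / n for k in range(n)]


def quantize_phase(phase_deg, bits):
    """Snap an ideal phase (degrees) to the nearest available level."""
    n = 2 ** bits
    step = 360.0 / n
    # Wrap into [0, 360), round to the nearest level index, wrap the index.
    index = round((phase_deg % 360.0) / step) % n
    return index * step


def config_space_size(bits, num_cells):
    """Number of distinct configurations of a surface with num_cells cells.

    Assumption: each cell is set independently of the others.
    """
    return 2 ** (bits * num_cells)


if __name__ == "__main__":
    print(phase_levels(1))            # binary cell: two levels
    print(quantize_phase(100.0, 1))   # coarse, 1-bit approximation
    print(quantize_phase(100.0, 2))   # finer, 2-bit approximation
    print(config_space_size(1, 16))   # search space for 16 binary cells
```

Under these assumptions, each extra bit per cell halves the phase step but multiplies the number of candidate configurations by `2**num_cells`, which is one way to see why finer cells make the configuration problem harder.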

-

Why On The Edge

This study proposes a novel approach to computing Reconfigurable Intelligent Surfaces (RIS) configurations from data derived from target angles: a signal must be redirected by a RIS device whose configuration is inferred by AI algorithms. This derived information can amount to large volumes of data, and the computational load grows with the size of the target RIS. Consequently, sending all of this data to a server for processing, and then sending the resulting RIS configuration back to the device or devices that modify the RIS setup, could consume significant bandwidth and add notable latency. Such an approach might therefore fail to meet real-time requirements. For these reasons, edge devices become essential to mitigate latency and reduce bandwidth usage.

-

Target Edge Devices

You can find the full article here.